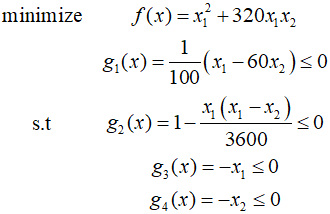

The constrained steepest descent (CSD) algorithm, one of the numerical methods for constrained optimum design, can calculate its step size with the golden section search. Golden section is among the better interval-reduction techniques, but it needs too many function evaluations to be efficient in complex engineering applications. Most practical implementations therefore use an inexact line search, which settles for an acceptable, approximate step size. In this post, I explain that method through an example.

At the design point ${x^{(0)}} = (40,0.5)$ the search direction is ${{\text{d}}^{(0)}} = (25.6,0.45)$, and the Lagrange multiplier vector for the constraints is given as ${\text{u}} = {\left[ {16300\,\,\,19400\,\,\,0\,\,\,0} \right]^T}$. Setting $\gamma = 0.5$, we use the inexact line search procedure to calculate the step size for the design change.

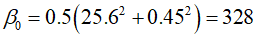

Since we have the Lagrange multipliers for the constraints, the initial value of the penalty parameter $R$ can be calculated as follows.

The same value of $R$ is used on both sides of the descent condition, and the same $R$ value is used throughout the step size calculation procedure during the current iteration. The constant ${\beta _0}$ can be calculated as follows.

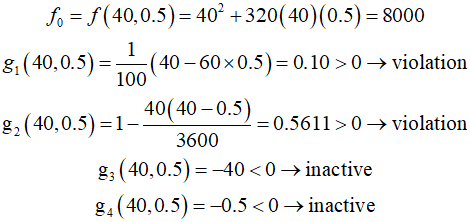
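As a quick numerical check, the two constants can be reproduced from the data given above. This is a minimal sketch that assumes the usual CSD choices, namely $R$ taken as the sum of the Lagrange multipliers and ${\beta _0} = \gamma {\left\| {{{\text{d}}^{(0)}}} \right\|^2}$; the variable names are my own.

```python
# Data from the example
u = [16300.0, 19400.0, 0.0, 0.0]   # Lagrange multipliers for the constraints
d0 = [25.6, 0.45]                  # search direction d^(0)
gamma = 0.5

# Assumption: penalty parameter R is the sum of the multipliers
R = sum(u)

# Assumption: beta_0 = gamma * ||d^(0)||^2
beta0 = gamma * sum(di * di for di in d0)

print(R)                 # 35700.0
print(round(beta0, 2))   # about 327.78
```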

At ${x^{(0)}}$, the cost function and constraint functions can be calculated as follows.

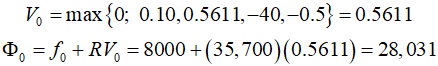

The maximum constraint violation and the current descent function can be calculated as follows.
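For reference, these are the definitions normally used in the CSD method when all constraints are inequalities of the form ${g_i}(x) \le 0$. The symbols $V$ and $\Phi$ are assumed here, since the post's own equations are in the images.

$$V(x) = \max \left\{ {0;\,{g_1}(x),\, \ldots ,\,{g_m}(x)} \right\},\qquad \Phi (x) = f(x) + R\,V(x)$$

At ${x^{(0)}}$ this gives ${V_0} = V({x^{(0)}})$ and ${\Phi _0} = f({x^{(0)}}) + R\,{V_0}$.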

Setting $j=0$, the trial step size ${t_0} = 1$. The trial design point in the search direction can be calculated as follows.

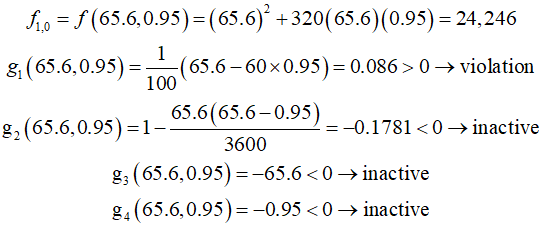

The cost function and constraint functions here can be calculated as follows.

The maximum constraint violation and the current descent function can be calculated as follows.

For the descent condition, the following equation can be obtained.
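In the notation assumed here ($\Phi$ for the descent function, ${\Phi _{1,0}}$ for its value at the trial point and ${\Phi _0}$ for its value at ${x^{(0)}}$), the descent condition for the trial step ${t_0}$ is usually written as

$${\Phi _{1,0}} \le {\Phi _0} - {t_0}{\beta _0}$$

so the descent function at the trial point must drop below its current value by at least ${t_0}{\beta _0}$.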

Therefore, the inequality is satisfied, and the step size ${t_0} = 1$ is accepted. If the inequality were violated, we would set $j = 1$, halve the trial step size to ${t_1} = 0.5$, and repeat the process.
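The whole procedure can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the descent function is taken as $\Phi = f + R\,V$ with inequality constraints $g_i(x) \le 0$, the trial steps are ${t_j} = {(1/2)^j}$, and the function names are hypothetical. The small problem at the bottom is a made-up toy problem, not the one from this post.

```python
def descent_function(f, gs, x, R):
    """Phi(x) = f(x) + R * V(x), where V(x) = max(0, g_1(x), ..., g_m(x))."""
    V = max([0.0] + [g(x) for g in gs])
    return f(x) + R * V


def inexact_step_size(f, gs, x, d, R, gamma=0.5, max_halvings=30):
    """Return the first t_j = 0.5**j satisfying Phi(x + t*d) <= Phi(x) - t*beta."""
    beta = gamma * sum(di * di for di in d)      # beta = gamma * ||d||^2
    phi0 = descent_function(f, gs, x, R)         # same R on both sides
    for j in range(max_halvings + 1):
        t = 0.5 ** j
        x_trial = [xi + t * di for xi, di in zip(x, d)]
        if descent_function(f, gs, x_trial, R) <= phi0 - t * beta:
            return t
    raise RuntimeError("no acceptable step size found")


# Toy problem (hypothetical): minimize x1^2 + x2^2 subject to 1 - x1 - x2 <= 0
def toy_cost(x):
    return x[0] ** 2 + x[1] ** 2


def toy_constraint(x):
    return 1.0 - x[0] - x[1]


step = inexact_step_size(toy_cost, [toy_constraint],
                         x=[2.0, 2.0], d=[-3.0, -3.0], R=10.0)
print(step)  # 0.5: t = 1 fails the descent condition, t = 0.5 passes
```

In this toy run the full step overshoots into the infeasible region, so the descent function increases and the step is halved once. Each trial costs only one evaluation of the cost and constraint functions, which is the main appeal over golden section search.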
