Understanding probability distributions matters in deep learning for several reasons. First, data itself follows some probability distribution, so knowing the common distributions helps in grasping the intrinsic characteristics of the data. For example, the exponential distribution describes non-negative quantities whose density decays quickly, and the Laplace distribution describes values sharply peaked around a center with heavier tails than a Gaussian; real-world data can show both kinds of behavior.

This understanding is essential for effectively processing data and for choosing or designing appropriate models.

Moreover, deep learning models, especially Bayesian deep learning models, use a probabilistic approach to model the uncertainty in data and estimate the confidence level of predictions. In such approaches, distributions play a central role. For instance, understanding how the noise or uncertainty in data affects the predictions of a model is crucial.

Additionally, the loss functions used to train models often assume that the errors in the data follow a specific probability distribution. These assumptions influence the choice of loss function and the optimization strategy. For example, minimizing mean squared error corresponds to maximum likelihood under Gaussian (normal) noise, while minimizing mean absolute error corresponds to maximum likelihood under Laplace noise.
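The link between loss functions and noise distributions can be checked numerically. The following is a minimal sketch, assuming NumPy and a fixed noise scale of 1; the data and the names `y_true`, `y_pred`, `gaussian_nll`, and `laplace_nll` are made up for illustration. It shows that the Gaussian negative log-likelihood differs from half the mean squared error only by a constant, and that the Laplace negative log-likelihood differs from the mean absolute error only by a constant, so minimizing one minimizes the other.

```python
import numpy as np

rng = np.random.default_rng(0)
y_true = rng.normal(size=100)                        # hypothetical targets
y_pred = y_true + rng.normal(scale=0.5, size=100)    # hypothetical predictions


def mse(y, y_hat):
    """Mean squared error."""
    return np.mean((y - y_hat) ** 2)


def mae(y, y_hat):
    """Mean absolute error."""
    return np.mean(np.abs(y - y_hat))


def gaussian_nll(y, y_hat, sigma=1.0):
    """Mean negative log-likelihood of y under N(y_hat, sigma^2)."""
    return np.mean(
        0.5 * np.log(2 * np.pi * sigma**2) + (y - y_hat) ** 2 / (2 * sigma**2)
    )


def laplace_nll(y, y_hat, gamma=1.0):
    """Mean negative log-likelihood of y under Laplace(y_hat, gamma)."""
    return np.mean(np.log(2 * gamma) + np.abs(y - y_hat) / gamma)


# With sigma = 1 and gamma = 1 the offsets do not depend on the predictions.
gauss_offset = gaussian_nll(y_true, y_pred) - 0.5 * mse(y_true, y_pred)
laplace_offset = laplace_nll(y_true, y_pred) - mae(y_true, y_pred)

print(gauss_offset, 0.5 * np.log(2 * np.pi))
print(laplace_offset, np.log(2.0))
```

Because the offsets are constants, the gradients with respect to the predictions are the same up to a scale factor, which is why the choice of loss can be read as a choice of noise model.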

Lastly, generative models such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) generate new data points by sampling from a specific distribution, typically a simple distribution over a latent variable, and transforming the sample. In these cases, how well the model captures the distribution of the data directly determines the quality of what it generates.

Therefore, in deep learning, understanding distributions plays a key role in various aspects such as data analysis, model design, and optimization of the learning process. This knowledge is essential for building and training effective deep learning models.

In this post, we briefly explore some basic distributions through a few examples.

Probability densities come in many forms. The simplest is one where $p(x)$ is a constant independent of $x$. Over an unbounded domain, however, the integral of such a density diverges, so it cannot be normalized.

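Distributions that cannot be normalized are called improper distributions.

A constant density is valid, however, when it is restricted to a finite interval $(c, d)$ and set to zero elsewhere. This gives the uniform distribution:

$$p(x) = \frac{1}{d - c}, \quad x \in (c, d)$$

Here $c$ and $d$ are the boundaries of the interval, and the density is uniform within it.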
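As a quick check of normalization, here is a minimal sketch, assuming NumPy and the standard uniform density $1/(d - c)$ on the interval and zero outside it. The function name `uniform_pdf` and the values of `c` and `d` are chosen only for illustration. The density is integrated with a midpoint Riemann sum over a range wider than the interval.

```python
import numpy as np


def uniform_pdf(x, c, d):
    """Density that equals 1 / (d - c) inside [c, d] and 0 elsewhere."""
    x = np.asarray(x, dtype=float)
    return np.where((x >= c) & (x <= d), 1.0 / (d - c), 0.0)


c, d = -1.0, 3.0  # illustrative interval boundaries

# Midpoint Riemann sum over [-5, 5], which fully contains [c, d].
edges = np.linspace(-5.0, 5.0, 100_001)
mid = 0.5 * (edges[:-1] + edges[1:])
width = edges[1] - edges[0]
total = np.sum(uniform_pdf(mid, c, d)) * width

print(total)  # close to 1, since the density is normalized
```

Letting the interval grow without bound drives $1/(d - c)$ to zero, which is another way to see why a constant density over the whole real line cannot be normalized.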

Another simple probability density is the exponential distribution, which is defined for $x \geq 0$ with a rate parameter $\lambda > 0$:

$$p(x \mid \lambda) = \lambda \exp(-\lambda x), \quad x \geq 0$$

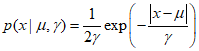

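A variation of the exponential distribution, the Laplace distribution, allows the peak to be moved to a location $\mu$ and extends symmetrically on both sides of it, with a scale parameter $\gamma > 0$:

$$p(x \mid \mu, \gamma) = \frac{1}{2\gamma} \exp\left(-\frac{|x - \mu|}{\gamma}\right)$$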
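The two densities can be compared numerically. The sketch below assumes NumPy and the standard parameterizations, a rate $\lambda$ for the exponential distribution and a location $\mu$ with scale $\gamma$ for the Laplace distribution; the function names and parameter values are illustrative. It checks that both densities integrate to approximately 1 and that the Laplace peak sits at $\mu$.

```python
import numpy as np


def exponential_pdf(x, lam):
    """lam * exp(-lam * x) for x >= 0, and 0 for x < 0."""
    x = np.asarray(x, dtype=float)
    # np.maximum avoids overflow in exp for negative x before masking.
    return np.where(x >= 0, lam * np.exp(-lam * np.maximum(x, 0.0)), 0.0)


def laplace_pdf(x, mu, gamma):
    """exp(-|x - mu| / gamma) / (2 * gamma), peaked at x = mu."""
    x = np.asarray(x, dtype=float)
    return np.exp(-np.abs(x - mu) / gamma) / (2.0 * gamma)


# Midpoint Riemann sum on a range wide enough that the tails are negligible.
edges = np.linspace(-40.0, 40.0, 800_001)
mid = 0.5 * (edges[:-1] + edges[1:])
width = edges[1] - edges[0]

exp_area = np.sum(exponential_pdf(mid, lam=1.0)) * width
lap_area = np.sum(laplace_pdf(mid, mu=1.0, gamma=1.0)) * width
lap_peak = mid[np.argmax(laplace_pdf(mid, mu=1.0, gamma=1.0))]

print(exp_area, lap_area, lap_peak)
```

Changing `mu` moves the Laplace peak without changing its shape, while the exponential density always starts at $x = 0$.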

Another important form is the Dirac delta function, which gives the density

$$p(x \mid \mu) = \delta(x - \mu)$$

This density is zero everywhere except at $x = \mu$ and integrates to 1, so all of the probability mass sits at $x = \mu$. Given a finite set of observations $\mathcal{D} = \{x_1, \ldots, x_N\}$, placing a delta function on each observation gives the empirical distribution:

$$p(x \mid \mathcal{D}) = \frac{1}{N} \sum_{n=1}^{N} \delta(x - x_n)$$
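In practice the empirical distribution is rarely written out with delta functions. The sketch below assumes NumPy and a made-up data set; the name `empirical_expectation` is hypothetical. It illustrates two consequences of placing equal mass $1/N$ on each observation: an expectation under the empirical distribution reduces to an average over the data, and sampling from it amounts to drawing observed points uniformly with replacement.

```python
import numpy as np

rng = np.random.default_rng(0)
data = rng.exponential(scale=2.0, size=1000)  # hypothetical observations x_1..x_N


def empirical_expectation(f, samples):
    """E[f(x)] under the empirical distribution: the average of f over the data."""
    return np.mean(f(samples))


# Integrating f against a sum of delta functions picks out f at each data point.
mean_est = empirical_expectation(lambda x: x, data)
second_moment = empirical_expectation(lambda x: x**2, data)

# Sampling from the empirical distribution: choose observed points
# uniformly at random, with replacement.
resampled = rng.choice(data, size=5000, replace=True)

print(mean_est, second_moment, resampled[:5])
```

Every resampled value is one of the original observations, because the empirical distribution assigns no probability mass anywhere else.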

Reference: Christopher M. Bishop and Hugh Bishop, "Deep Learning: Foundations and Concepts"
