Time series data is a sequence of data points recorded at regular intervals. Long Short-Term Memory (LSTM) models, a type of recurrent neural network (RNN), are widely used for this kind of data because they are designed to process sequences. In this blog post, we will explore how to use an LSTM to predict future coronavirus cases from real data. The explanations and code in this example follow the book listed in the reference at the end of the post; see it for more detail.

```python
import torch
import os
import numpy as np
import pandas as pd
from tqdm import tqdm
import seaborn as sns
from pylab import rcParams
import matplotlib.pyplot as plt
from matplotlib import rc
from sklearn.preprocessing import MinMaxScaler
from pandas.plotting import register_matplotlib_converters
from torch import nn, optim
```

The required libraries are listed above; they were covered in previous posts, so they are not explained individually here. The data comes from the Johns Hopkins University Center for Systems Science and Engineering and contains the daily reported cases by country. Here, we will use only the time series of confirmed cases.

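```python
# Notebook shell commands (IPython "!" syntax): download the CSV.
# !wget https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/csse_covid_19_data/csse_covid_19_time_series/time_series_19-covid-Confirmed.csv
!gdown --id 1AsfdLrGESCQnRW5rbMz56A1KBc3Fe5aV

# Load the confirmed-cases table and inspect the first rows.
df = pd.read_csv('time_series_19-covid-Confirmed.csv')
df.head()
```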

The data includes the province/state, country, latitude, and longitude, but this information is not needed here, so we drop those columns. In addition, the case counts are cumulative, so we will convert them to daily new cases.
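
To make the layout concrete, here is a minimal sketch that builds a tiny made-up table in the same wide format (four metadata columns followed by one column per date) and collapses it into a single series of totals. The column names are assumed to mirror the dataset's header; the regions and numbers are invented purely for illustration.

```python
import io

import pandas as pd

# Made-up rows in the same wide layout as the downloaded file:
# four metadata columns, then one cumulative-count column per date.
toy_csv = io.StringIO(
    "Province/State,Country/Region,Lat,Long,1/22/20,1/23/20,1/24/20\n"
    "Region A,Country X,10.0,20.0,1,3,6\n"
    ",Country Y,30.0,40.0,0,2,2\n"
)
toy_df = pd.read_csv(toy_csv)

# Drop the four metadata columns, keeping only the date columns.
toy_counts = toy_df.iloc[:, 4:]

# Sum over rows (regions) to get one cumulative total per date,
# then turn the date strings into a DatetimeIndex.
toy_total = toy_counts.sum(axis=0)
toy_total.index = pd.to_datetime(toy_total.index)

print(toy_total.tolist())  # [1, 5, 8]
```

Summing along axis 0 is what turns the per-region rows into one worldwide value per day.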

df = df.iloc[:,4:]

df.head()

daily_cases = df.sum(axis=0)

daily_cases.index = pd.to_datetime(daily_cases.index)

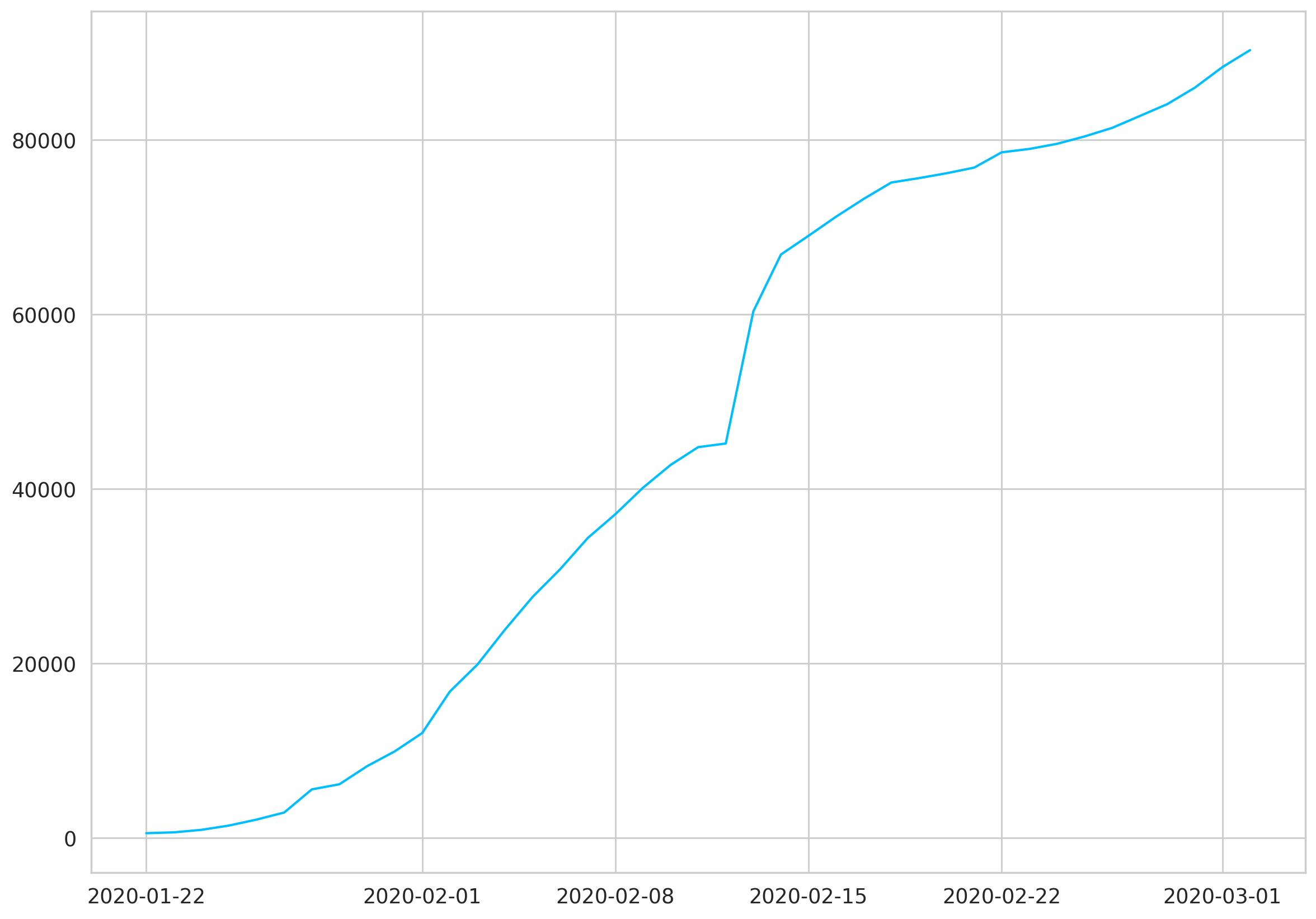

plt.plot(daily_cases)

It can be observed that the data is cumulative.

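```python
# diff() leaves NaN in the first position, so fill it with the first cumulative count.
# .iloc[0] selects by position; plain [0] on a date-indexed Series is deprecated.
daily_cases = daily_cases.diff().fillna(daily_cases.iloc[0]).astype(np.int64)
plt.plot(daily_cases)
```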

Using diff, which subtracts the previous day's value from each day's value, we can easily convert the cumulative counts into daily new cases.
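
The conversion can be checked on a small example. The sketch below uses a made-up cumulative series (the values are invented, not taken from the dataset) and applies the same diff-then-fill pattern.

```python
import numpy as np
import pandas as pd

# Made-up cumulative totals for five consecutive days.
cumulative = pd.Series(
    [1, 5, 8, 8, 15],
    index=pd.date_range("2020-01-22", periods=5, freq="D"),
)

# diff() subtracts the previous day's value; the first entry has no
# predecessor and becomes NaN, so it is filled with the first cumulative value.
daily = cumulative.diff().fillna(cumulative.iloc[0]).astype(np.int64)

print(daily.tolist())  # [1, 4, 3, 0, 7]

# Sanity check: the running sum of the daily values recovers the cumulative series.
assert (daily.cumsum() == cumulative).all()
```

A day on which the cumulative total does not change shows up as 0 new cases.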

```python
# Hold out the last 14 days for testing.
test_data_size = 14
train_data = daily_cases[:-test_data_size]
test_data = daily_cases[-test_data_size:]

# Fit the scaler on the training data only, then apply it to both splits.
scaler = MinMaxScaler()
scaler = scaler.fit(np.expand_dims(train_data, axis=1))
train_data = scaler.transform(np.expand_dims(train_data, axis=1))
test_data = scaler.transform(np.expand_dims(test_data, axis=1))


def create_sequences(data, seq_length):
    """Build (input window, next value) pairs from a 2-D array."""
    xs = []
    ys = []
    # Note: the extra "- 1" means the final data point is never used as a target.
    for i in range(len(data) - seq_length - 1):
        x = data[i:(i + seq_length)]
        y = data[i + seq_length]
        xs.append(x)
        ys.append(y)
    return np.array(xs), np.array(ys)


seq_length = 5
X_train, y_train = create_sequences(train_data, seq_length)
X_test, y_test = create_sequences(test_data, seq_length)

# Convert the NumPy arrays to float32 PyTorch tensors.
X_train = torch.from_numpy(X_train).float()
y_train = torch.from_numpy(y_train).float()
X_test = torch.from_numpy(X_test).float()
y_test = torch.from_numpy(y_test).float()
```

The code splits the time series data into training and test sets, normalizes it with a scaler fitted on the training set only, and creates fixed-length input sequences for model training. Finally, it converts the data into PyTorch tensors for use as model input.
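
The windowing step is easiest to follow on a short array. The sketch below uses a hypothetical helper, make_windows, written for this illustration; unlike create_sequences it loops over range(len(series) - seq_length), so every available target is used. The input values are made up.

```python
import numpy as np


def make_windows(series, seq_length):
    """Pair each run of `seq_length` values with the value that follows it."""
    xs, ys = [], []
    for start in range(len(series) - seq_length):
        xs.append(series[start:start + seq_length])
        ys.append(series[start + seq_length])
    return np.array(xs), np.array(ys)


# Made-up series of 8 values with a trailing feature axis: shape (8, 1).
toy = np.arange(8, dtype=np.float32).reshape(-1, 1) / 10.0

X, y = make_windows(toy, seq_length=5)

print(X.shape, y.shape)  # (3, 5, 1) (3, 1)
print(X[0].ravel())      # first window: values at positions 0..4
print(y[0])              # its target: the value at position 5
```

Each window of 5 consecutive values becomes one training input, and the value immediately after it becomes the label, which is why 8 points yield only 3 pairs.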

```python
class CoronaVirusPredictor(nn.Module):

    def __init__(self, n_features, n_hidden, seq_len, n_layers=2):
        super(CoronaVirusPredictor, self).__init__()

        self.n_hidden = n_hidden
        self.seq_len = seq_len
        self.n_layers = n_layers

        self.lstm = nn.LSTM(
            input_size=n_features,
            hidden_size=n_hidden,
            num_layers=n_layers,
            dropout=0.5
        )

        self.linear = nn.Linear(in_features=n_hidden, out_features=1)

    def reset_hidden_state(self):
        self.hidden = (
            torch.zeros(self.n_layers, self.seq_len, self.n_hidden),
            torch.zeros(self.n_layers, self.seq_len, self.n_hidden)
        )

    def forward(self, sequences):
        lstm_out, self.hidden = self.lstm(
            sequences.view(len(sequences), self.seq_len, -1),
            self.hidden
        )
        last_time_step = \
            lstm_out.view(self.seq_len, len(sequences), self.n_hidden)[-1]
        y_pred = self.linear(last_time_step)
        return y_pred
```

The code above defines an LSTM-based neural network model. With nn.LSTM, the recurrent layers can be defined and initialized in a single call. The reset_hidden_state function initializes the hidden state and cell state as tensors of zeros. The forward function passes the input through the LSTM layers to obtain the output and updates the hidden state. last_time_step is intended to hold the LSTM output at the last time step, which is then passed through the final linear layer to compute the prediction. One caveat: nn.LSTM defaults to batch_first=False and therefore reads its input as (seq_len, batch, n_features), whereas this code, following the book, passes a (batch, seq_len, n_features) view and rearranges the output with view rather than a transpose, so the axes do not line up with their names. Continued in the next post.
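
For comparison, here is a minimal sketch of a variant that makes the tensor layout explicit by setting batch_first=True. This is not the book's code: the class name BatchFirstPredictor and the hidden size of 16 are assumptions chosen for illustration, and the variant lets nn.LSTM start from a zero state on each call instead of keeping a stored hidden state.

```python
import torch
from torch import nn


class BatchFirstPredictor(nn.Module):
    """Hypothetical variant: input is (batch, seq_len, n_features)."""

    def __init__(self, n_features, n_hidden, n_layers=2):
        super().__init__()
        self.lstm = nn.LSTM(
            input_size=n_features,
            hidden_size=n_hidden,
            num_layers=n_layers,
            dropout=0.5,
            batch_first=True,  # interpret axis 0 as the batch dimension
        )
        self.linear = nn.Linear(in_features=n_hidden, out_features=1)

    def forward(self, sequences):
        # With no explicit state passed, nn.LSTM starts from zeros on each call.
        lstm_out, _ = self.lstm(sequences)   # (batch, seq_len, n_hidden)
        last_time_step = lstm_out[:, -1, :]  # (batch, n_hidden)
        return self.linear(last_time_step)   # (batch, 1)


model = BatchFirstPredictor(n_features=1, n_hidden=16)
dummy = torch.zeros(4, 5, 1)  # 4 windows of 5 time steps, 1 feature each
out = model(dummy)
print(out.shape)  # torch.Size([4, 1])
```

Indexing lstm_out[:, -1, :] selects the last time step for every window in the batch, so each of the 4 input windows produces exactly one prediction.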

ref: Venelin Valkov, "Get SH*T Done with PyTorch: Solve Real-world Machine Learning Problems with Deep Neural Networks in Python" (2020)
