Continuing from the previous post, I will write the code and explain it step by step.

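```python
def calculate_accuracy(y_true, y_pred):
    # Threshold the predicted probabilities at 0.5 and flatten to 1-D
    predicted = y_pred.ge(.5).view(-1)
    # Fraction of predictions that match the true labels
    return (y_true == predicted).sum().float() / len(y_true)

def round_tensor(t, decimal_places=3):
    # Convert a one-element tensor to a Python number and round it
    return round(t.item(), decimal_places)
```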

The calculate_accuracy function returns the model's prediction accuracy. ge(.5) checks whether each element of y_pred is greater than or equal to 0.5, turning the predicted probabilities into True or False, and view(-1) flattens the result into a 1-dimensional tensor. The function then compares these predictions with y_true element by element, counts the matches, converts the count to a float, and divides it by the total number of samples to get the accuracy.

The round_tensor function takes a tensor t and rounds its value to decimal_places digits (3 by default). t.item() converts the single-element tensor into a Python number, which the built-in round then rounds. Together, these two functions compute the model's accuracy and present it concisely.
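To see the two helpers at work, here is a small toy run. The functions are copied from above so the snippet runs on its own, and the label and probability tensors are made up for illustration. Note that y_true is a float tensor while the thresholded predictions are booleans; PyTorch promotes the types when comparing them, so True matches 1.0 and False matches 0.0.

```python
import torch

def calculate_accuracy(y_true, y_pred):
    # Threshold probabilities at 0.5 (bool tensor), flatten to 1-D
    predicted = y_pred.ge(.5).view(-1)
    # Count matching positions and divide by the number of samples
    return (y_true == predicted).sum().float() / len(y_true)

def round_tensor(t, decimal_places=3):
    # .item() turns a one-element tensor into a Python float
    return round(t.item(), decimal_places)

# Toy labels and sigmoid outputs (hypothetical values)
y_true = torch.tensor([1., 0., 1., 1.])
y_prob = torch.tensor([0.9, 0.2, 0.4, 0.7])

# Predictions become [True, False, False, True]; 3 of 4 match the labels
acc = calculate_accuracy(y_true, y_prob)
print(round_tensor(acc))  # 0.75
```

The third sample (probability 0.4, label 1) is the only miss, which is why the accuracy comes out to 3/4.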

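```python
import torch

# net, criterion, optimizer and the X/y train/test tensors come from the previous post
for epoch in range(1000):
    y_pred = net(X_train)
    y_pred = torch.squeeze(y_pred)  # (N, 1) -> (N,) to match y_train
    train_loss = criterion(y_pred, y_train)

    if epoch % 100 == 0:
        train_acc = calculate_accuracy(y_train, y_pred)

        # The test pass is only for monitoring, so no gradients are needed
        with torch.no_grad():
            y_test_pred = net(X_test)
            y_test_pred = torch.squeeze(y_test_pred)
            test_loss = criterion(y_test_pred, y_test)
            test_acc = calculate_accuracy(y_test, y_test_pred)

        print(f'''epoch {epoch} Train set - loss: {round_tensor(train_loss)}, accuracy: {round_tensor(train_acc)}
Test set - loss: {round_tensor(test_loss)}, accuracy: {round_tensor(test_acc)}
''')

    optimizer.zero_grad()   # reset gradients from the previous step
    train_loss.backward()   # compute gradients by backpropagation
    optimizer.step()        # update the parameters
```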

The training loop runs for 1,000 epochs. In each epoch, X_train is passed through the network to get predictions, and torch.squeeze removes dimensions of size 1 from the output, turning it into a 1-dimensional tensor with the same shape as y_train. The criterion then compares y_pred with y_train to compute the training loss. Every 100 epochs, the loss and accuracy on both the training and test sets are computed and printed: train_acc is the accuracy on the training data, y_test_pred holds the model's predictions for X_test, and test_loss and test_acc are the loss and accuracy on the test data. Finally, optimizer.zero_grad() clears the gradients left over from the previous step, train_loss.backward() computes the gradients of the loss through backpropagation, and optimizer.step() updates the model parameters using those gradients.
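The squeeze step and the zero_grad / backward / step cycle can be tried in isolation with a minimal, self-contained sketch. Everything here is an assumption standing in for what the previous post defined: the synthetic two-feature data, the single Linear + Sigmoid network, nn.BCELoss as the criterion, and Adam with lr=0.05.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-ins for the previous post's data, net, criterion and optimizer
X_train = torch.randn(200, 2)
y_train = (X_train[:, 0] + X_train[:, 1] > 0).float()

net = nn.Sequential(nn.Linear(2, 1), nn.Sigmoid())  # one probability per sample
criterion = nn.BCELoss()
optimizer = torch.optim.Adam(net.parameters(), lr=0.05)

print(net(X_train).shape)                 # torch.Size([200, 1])
print(torch.squeeze(net(X_train)).shape)  # torch.Size([200])

losses = []
for epoch in range(200):
    y_pred = torch.squeeze(net(X_train))  # (200, 1) -> (200,) to match y_train
    train_loss = criterion(y_pred, y_train)
    optimizer.zero_grad()                 # clear gradients left from the previous step
    train_loss.backward()                 # backpropagate to fill .grad on each parameter
    optimizer.step()                      # update parameters using those gradients
    losses.append(train_loss.item())

print(round(losses[0], 3), round(losses[-1], 3))
```

zero_grad() is needed because PyTorch accumulates gradients in .grad across backward() calls; without it, each step would use the sum of all previous gradients.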

This process allows the model to be trained iteratively while monitoring its performance.

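```python
import torch
from sklearn.metrics import classification_report

classes = ['No rain', 'Raining']

with torch.no_grad():                  # inference only, no gradients needed
    y_pred = net(X_test)
y_pred = y_pred.ge(.5).view(-1).cpu()  # probabilities -> True/False predictions
y_test = y_test.cpu()

print(classification_report(y_test, y_pred, target_names=classes))
```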

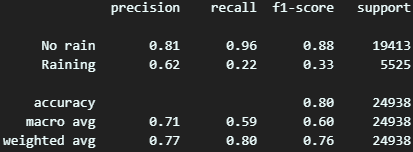

The class names are set to 'No rain' and 'Raining'. The test-set predictions are thresholded at 0.5, both the actual and predicted values are moved to the CPU (scikit-learn cannot read tensors that live on the GPU), and classification_report prints the precision, recall, F1-score, and support for each class.

The report shows that the model works well for the 'No rain' class but performs poorly on the 'Raining' class, so its predictions of rain are unreliable.
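To make the report's columns concrete, here is a from-scratch sketch of the per-class numbers, using the standard definitions precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 as their harmonic mean. The helper per_class_report is hypothetical (it is not scikit-learn's implementation), and the labels are a made-up, imbalanced toy set, not the results shown above.

```python
def per_class_report(y_true, y_pred, labels):
    """Precision, recall, F1 and support per class (hypothetical helper)."""
    report = {}
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = {"precision": precision, "recall": recall,
                         "f1-score": f1, "support": tp + fn}
    return report

# Toy labels: 0 = 'No rain', 1 = 'Raining' (not the post's data)
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 0, 0, 1, 1]

report = per_class_report(y_true, y_pred, [0, 1])
for label, name in [(0, "No rain"), (1, "Raining")]:
    print(name, {k: round(v, 2) for k, v in report[label].items()})
```

In this toy case 'Raining' gets a recall of 0.5 because half of the rainy samples were predicted as 'No rain', which is the kind of gap a per-class report exposes and a single accuracy number hides.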

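```python
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns
from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y_test, y_pred)
df_cm = pd.DataFrame(cm, index=classes, columns=classes)

hmap = sns.heatmap(df_cm, annot=True, fmt='d')
# Keep the y-axis (true) labels horizontal and tilt the x-axis (predicted) labels
hmap.yaxis.set_ticklabels(hmap.yaxis.get_ticklabels(), rotation=0, ha='right')
hmap.xaxis.set_ticklabels(hmap.xaxis.get_ticklabels(), rotation=30, ha='right')

plt.ylabel('True label')
plt.xlabel('Predicted label')
plt.show()
```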

confusion_matrix computes a matrix comparing the actual and predicted values, with one row per true class and one column per predicted class. The matrix is wrapped in a DataFrame labeled with the class names and drawn with sns.heatmap, where annot=True writes the count in each cell and fmt='d' formats it as an integer. The heatmap makes it easy to see at a glance which classes the model confuses.
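The layout of the matrix can be reproduced by hand: cell [i][j] counts samples whose true class is i and whose predicted class is j, which is the convention scikit-learn's confusion_matrix uses. The helper confusion_matrix_2d and the toy labels below are hypothetical, for illustration only.

```python
def confusion_matrix_2d(y_true, y_pred, labels):
    """Rows = true label, columns = predicted label (hypothetical helper)."""
    index = {label: i for i, label in enumerate(labels)}
    cm = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        cm[index[t]][index[p]] += 1
    return cm

# Toy labels: 0 = 'No rain', 1 = 'Raining' (not the post's data)
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 0, 0, 1, 1]

cm = confusion_matrix_2d(y_true, y_pred, [0, 1])
print(cm)  # [[5, 1], [2, 2]]

# Correct predictions sit on the diagonal
correct = sum(cm[i][i] for i in range(len(cm)))
print(correct / len(y_true))  # 0.7
```

Read against the heatmap above, the bottom-left cell (true 'Raining', predicted 'No rain') counts the rainy days the model missed.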

Reference: Venelin Valkov, Get SH*T Done with PyTorch: Solve Real-world Machine Learning Problems with Deep Neural Networks in Python (2020)
